
Robot avatar – a means of human telepresence in space

Introduction

Space is an extremely hostile environment to which human physiology is not adapted. Lack of gravity causes atrophy of striated muscle (the muscles of the torso and limbs, and the myocardium), demineralization of bone tissue with an accompanying increase in bone fragility, fluid redistribution and dehydration, cardiovascular disorders, changes in sensory perception, and disturbances of proprioception, balance, and motor control.

Exposure to cosmic radiation, in particular to heavy charged particles, kills living cells in human organs and tissues and causes mutations and reactions that can result in failure of the immune system and bone marrow, the development of cancer, and degeneration of brain tissue accompanied by impairment of memory and cognitive abilities, among other effects.

Normal human life requires a magnetic field with characteristics similar to those of the Earth's magnetic field. Otherwise, hypomagnetic conditions will have a negative impact, which is still being studied.

The more knowledge we gain about the cosmos, the less confident we are that a person will be able to live and work there unhindered. Yet without a human presence, the exploration of space, and even more so its use, is almost meaningless. In this regard, it would be useful to focus on creating technologies for human telepresence in hostile environments, first and foremost in space and on the planets of the solar system.

In general, telepresence refers to functionality that allows the operator of a telepresence device to receive sensory information (video, sound, possibly tactile signals, etc.) from a remote location by means of various sensors installed on a stationary or mobile platform. Recently, telepresence technology has also come to be called avatar technology.

Obviously, telepresence technology designed for space applications implies not only receiving information but also active operations that the human operator commands the mobile platform (robot avatar) to perform remotely. Thanks to the feedback between the robot's sensors and the corresponding human sensory systems, the operator receives information about the results of "their" actions and thus gains the possibility of almost full-fledged, virtually augmented activity in outer space or on the surface of another planet.

The purpose of this article is to analyze the prospects for creating and using in practice avatar technology intended for the study, exploration, and use of space, and to outline the likely design of avatars and of the systems that couple a robot avatar with a human operator.

1. Possible design of an avatar robot intended to work in space and on planets with aggressive environments

For meaningful and quick action in an environment as hostile as space, the sensory systems and actuators of the robot must correspond as closely as possible to those of the human: decision-making and action in extreme conditions rely more on reflexes and spontaneous, intuitive actions than on actions that are clearly understood and consciously planned.

According to the teachings of N.A. Bernstein, the evolutionary success of humans in developing motor control is associated with a complete understanding of the structure and composition of the movement being learned. A person can therefore develop motor skills and dexterity most effectively in relation to an anthropomorphic body, and so the robot avatar should be as anthropomorphic as possible. The copy control mode, based on the reflexes and natural movements of the operator's own body, yields a severalfold gain in speed and accuracy compared with control by buttons or joysticks.

It should be noted that an anthropomorphic robot avatar controlled remotely in copy mode could have been created as early as the end of the last century: the level of science and technology at the time fully allowed it. The difficulty lies in formulating such a task and organizing the work to solve it.

Table 1 lists the characteristics of some modern anthropomorphic robots, including two robots intended for use in space, Robonaut 2 (USA) and FEDOR (Russia), which are capable of operating in a limited telepresence mode.

Modern robots are composite metal-and-plastic structures with high mobility provided by electromechanical drives (up to 50 drives). Their control systems are built on distributed computing systems, with software based on various algorithms that usually constitute the developer's know-how.

Development of the Robonaut space robot began in 1997, but the first series of robots, released in the early 2000s, never flew into space. In 2006, General Motors became interested in the project. In February 2010, the first R2 was demonstrated. On February 24, 2011, Robonaut was sent to the ISS, where it still performs some of the tasks required during planned experiments.

Until 2012, operators on Earth controlling the R2 robot aboard the ISS in avatar mode practiced operating various switches and cleaning handrails on board the station.

Table 1. Results of anthropomorphic robot development

| Robot, year of development, developer | Characteristics |
|---|---|
| Robonaut 2 (R2), 2010, General Motors and NASA with the assistance of Oceaneering Space Systems, USA | Height: 101.6 cm (from the waist to the head). Shoulder width: 78.74 cm. Weight: 149.7 kg. |
| Eccerobot (Embodied Cognition in a Compliantly Engineered Robot), 2011, University of Sussex, UK | Height: 105 cm. Shoulder width: 48 cm. Weight: 26 kg. |
| Atlas, 2016, Boston Dynamics (as part of the competition announced by DARPA), USA | Height: 150 cm. Weight: 80 kg. Speed of movement: 5.4 km/h. Payload: 11 kg. Vision: lidar and stereo camera. Number of joints: 28. Energy source: battery. High strength-to-weight ratio. Supports autonomous movement and movement under remote control. Designed for use outdoors and inside buildings. Uses both electric and hydraulic drives. |
| FEDOR (Final Experimental Demonstration Object Research), 2014-2019, NPO Android Technics and the Advanced Research Foundation [4, 5] | Height: 184 cm. Shoulder width: 52 cm (48 cm in the space version). Weight: 160 kg (105 kg in the space version). Power plant capacity: 13.5 kW. Dual power supply: by cable and from the built-in battery. Two built-in storage batteries from the Orlan spacesuit were used in preparation for the space mission. Autonomous operating time: 1 hour. Number of servos: 48. A heating system for critical components protects against rapid cooling at low temperatures. |

The anthropomorphic robot FEDOR, equipped with a speech recognition and synthesis system, flew to the International Space Station aboard the Soyuz MS-14 spacecraft in August 2019, occupying the central (pilot's) seat. The robot can operate in avatar mode under the control of an operator via a satellite communication system.

Of the anthropomorphic robots in Table 1, Eccerobot most closely resembles a human in anatomy. It has about a hundred artificial muscles responsible for movement. Its facial expressions are especially well developed.

In terms of coordination and speed of bipedal movement, the most advanced robot is Atlas, which can move over rough terrain, climb vertical surfaces using its hands and feet, perform flips, and so on. The first version, released in 2013, was tethered by a cable that supplied power and carried control signals. The new modification, dubbed Atlas Unplugged (wireless Atlas), runs on a battery and uses wireless control.

Unlike existing robots, prospective avatar robots designed for space applications should:

- Fully implement the principles of remote human telepresence;

- Have a sensory system and a system of actuators that correspond as closely as possible to human anatomy;

- Have the limited (partial) autonomy required when communication with the human operator is greatly delayed or even interrupted;

- Be resistant to the adverse factors of outer space or of other planets.

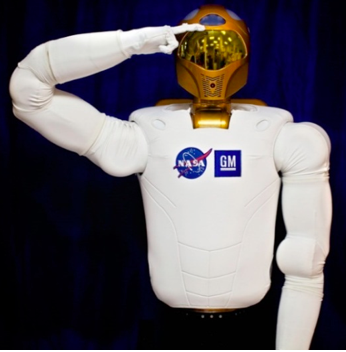

Unlike the first requirement, the rest can be implemented on the basis of existing technologies (Figure 1).
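The limited-autonomy requirement in the list above can be illustrated with a small watchdog. This is only a sketch under stated assumptions: the class name `LinkWatchdog`, the mode names, and the 2-second timeout are hypothetical and do not come from any of the robots described here.

```python
from dataclasses import dataclass


@dataclass
class LinkWatchdog:
    """Switches the avatar to an autonomous hold when operator commands stop arriving."""
    timeout_s: float = 2.0        # hypothetical threshold, not taken from the article
    last_command_t: float = 0.0   # time of the most recent operator command, seconds
    mode: str = "teleoperation"

    def on_command(self, t: float) -> None:
        # A fresh operator command restores direct (copy-mode) control.
        self.last_command_t = t
        self.mode = "teleoperation"

    def tick(self, t: float) -> str:
        # Called periodically by the robot's control loop with the current time.
        if t - self.last_command_t > self.timeout_s:
            self.mode = "autonomous_hold"
        return self.mode


wd = LinkWatchdog(timeout_s=2.0)
wd.on_command(t=0.0)
print(wd.tick(t=1.0))   # link is alive
print(wd.tick(t=3.5))   # no command for more than 2 s
wd.on_command(t=4.0)
print(wd.tick(t=4.1))   # link restored
```

In a real system the autonomous mode would have to do more than hold position (for example, move to a safe posture), but the switching logic would likely have this shape.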

However, the key difference between avatars and conventional telepresence robots is that the avatar must be "synchronized" with the human operator: it must transmit the full range of signals (data about the environment) and repeat the operator's movements, obeying the control commands generated by the "robot avatar - human operator" interface. The information signals can be transmitted over a cellular or satellite channel with a corresponding delay that increases with the distance between the robot and the human.
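To give a sense of scale for this delay, the sketch below computes only the signal propagation time at the speed of light, t = d / c. The distances are approximate reference values added here for illustration; relay hops, processing, and encoding delays are ignored, so real delays would be larger.

```python
C_KM_S = 299_792.458  # speed of light in vacuum, km/s


def one_way_delay_s(distance_km: float) -> float:
    """Propagation time of a signal over the given distance, in seconds."""
    return distance_km / C_KM_S


def round_trip_delay_s(distance_km: float) -> float:
    """Time from an operator's command to the arrival of the resulting feedback."""
    return 2.0 * one_way_delay_s(distance_km)


# Approximate Earth-to-target distances in km (illustrative assumptions).
links = {
    "Moon (mean distance)": 384_400.0,
    "Mars (closest approach)": 54.6e6,
    "Mars (greatest distance)": 401e6,
}

for name, distance in links.items():
    print(f"{name}: round trip {round_trip_delay_s(distance):.1f} s")
```

The round trip is roughly 2.6 s for the Moon and ranges from about 6 to about 45 minutes for Mars, which is why copy-mode control from Earth is plausible only for near-Earth targets, while more distant avatars would need operators nearby or a greater degree of autonomy.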


In March 2018, the XPRIZE Foundation announced the launch of a competition to create avatar robots. The competition is sponsored by All Nippon Airways (ANA), and the prize pool is $10 million. The competition itself is to be held in 2021, and the winning team is to be announced in early 2022.

Figure 1. Technology of anthropomorphic robots

2. Interface between a human operator and an avatar robot

Two possible approaches to implementing the interface between the avatar robot and the human are currently being considered:

- based on the robot repeating the real physical movements of the human (the person wears a special telepresence suit);

- based on a neurointerface (the person lies or sits motionless).

A telepresence suit is a device that provides a two-way connection: from human to avatar and from avatar to human. The system for recreating reality should be as accurate as possible. Obviously, the real conditions in which the robot finds itself will be virtual for the person acting as the avatar operator. The most difficult task is transmitting information about the robot's real interaction with the environment to the human and ensuring that the human perceives it adequately.

The telepresence suit is an exoskeleton or soft exo-shell with built-in sensors and a VR helmet or VR glasses (Figure 2).

The prototype of the telepresence suit may be an exoskeleton ("external" skeleton), a powered device designed to restore lost functions or enhance existing functions of the human musculoskeletal system.

In telepresence-suit mode, the exoskeleton performs the opposite function: it transmits signals from the human operator's musculoskeletal system to the robot avatar. At the same time, the robot avatar transmits information from its sensors to the human, in effect enhancing the operator's sensory abilities.

The VR helmet or VR glasses allow the operator to receive audiovisual information from the robot avatar.

Fig. 2. Exoskeleton (telepresence suit) and its elements

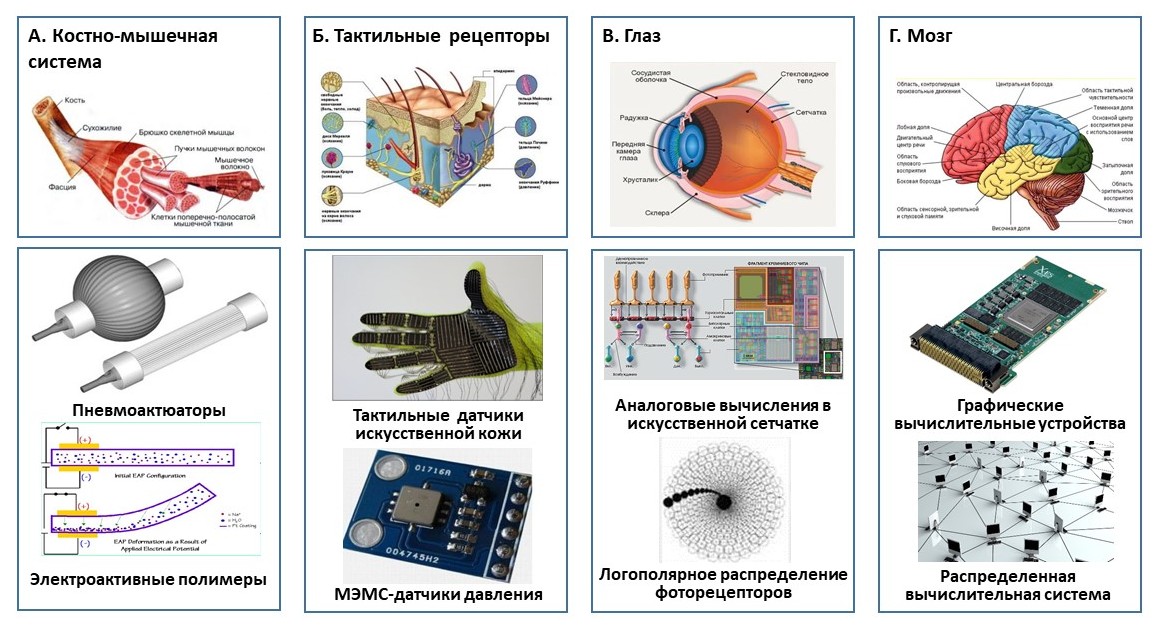

For better control of the avatar robot, the operator can be placed in a special gimbal (Cardan) suspension (Figure 3).

The Cardan suspension keeps the sensations of the operator's vestibular system consistent with the signals from the sensors in the robot avatar's limbs and with the video stream it transmits. The operator, whose angular position in space can change freely, will be able to have the same tilt relative to the horizon as the body of the robot avatar in space or on the surface of another celestial body.
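As an illustration of how the operator's tilt could be matched to that of the robot, the sketch below estimates roll and pitch from the gravity vector measured in the robot's body frame and clamps the result to a mechanical limit of the suspension. The axis convention (x forward, y left, z up, with a level static body reading (0, 0, +g)), the function names, and the 60-degree limit are assumptions made for this example.

```python
import math


def tilt_from_gravity(ax: float, ay: float, az: float) -> tuple[float, float]:
    """Return (roll, pitch) in degrees from a static accelerometer reading.

    Assumed body frame: x forward, y left, z up. Angles follow the right-hand
    rule about the x axis (roll) and the y axis (pitch).
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)


def gimbal_setpoint(roll_deg: float, pitch_deg: float,
                    limit_deg: float = 60.0) -> tuple[float, float]:
    """Clamp the desired operator tilt to a hypothetical suspension limit."""
    def clamp(angle: float) -> float:
        return max(-limit_deg, min(limit_deg, angle))
    return clamp(roll_deg), clamp(pitch_deg)


g = 9.81
# Robot body pitched by 30 degrees about its y axis, no roll.
reading = (-g * math.sin(math.radians(30.0)), 0.0, g * math.cos(math.radians(30.0)))
roll, pitch = tilt_from_gravity(*reading)
print(gimbal_setpoint(roll, pitch))
```

This works only while the robot is not accelerating strongly; a practical system would fuse gyroscope data as well.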

To transmit the movements of the hand and fingers from the operator to the robot, it is currently proposed to use Intel RealSense technology, which tracks not only the palms but also the fingers and even individual phalanges, up to 22 points on each hand.

The exoskeleton (exo-shell) is equipped with various types of sensors and with devices for communication in both directions: from the human to the robot avatar and from the avatar to the human. When movement is registered, the system records the joint angles, the velocities and torques of limb flexion in the various joints, the force of impact, the speed of movement, and the pressure on the surface, as well as the electrical potentials of the muscles (EMG). This makes it possible to optimize the communication between the operator and the exoskeleton and to take the operator's physiological characteristics into account.
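A minimal sketch of the copy control mode follows: joint angles measured by the suit are passed to the robot as setpoints, clamped to the robot's own joint limits. The joint names and limit values are hypothetical and serve only to illustrate the mapping.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class JointLimit:
    lo_deg: float
    hi_deg: float


# Hypothetical robot joints and ranges, for illustration only.
ROBOT_LIMITS = {
    "shoulder_pitch": JointLimit(-90.0, 180.0),
    "elbow": JointLimit(0.0, 140.0),
    "wrist_roll": JointLimit(-80.0, 80.0),
}


def copy_mode_command(operator_angles_deg: dict[str, float]) -> dict[str, float]:
    """Map measured operator joint angles to robot setpoints within robot limits."""
    command = {}
    for joint, limit in ROBOT_LIMITS.items():
        if joint not in operator_angles_deg:
            continue  # no measurement for this joint: send no setpoint
        angle = operator_angles_deg[joint]
        command[joint] = min(max(angle, limit.lo_deg), limit.hi_deg)
    return command


# The operator's elbow reading exceeds the robot's range and is clamped.
print(copy_mode_command({"shoulder_pitch": 45.0, "elbow": 155.0}))
# prints {'shoulder_pitch': 45.0, 'elbow': 140.0}
```

The closer the robot's kinematics are to the human's, the less often such clamping or rescaling is needed, which is one practical argument for an anthropomorphic design.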

Fig. 3. Operator of the robot avatar in the Cardan suspension (gimbal support)

A neurointerface (or brain-computer interface) is a device for exchanging information between the brain and an external device. The operator's mental commands are decoded from recordings of the electrical activity of the brain and passed to the controlled device, which can be any electronic device, including a space robot.

As early as the late 1960s, it became clear that the activity of neurons responsible for movement can be controlled voluntarily without the corresponding movements actually being made. This result underlies the principle of controlling a robot avatar by the thought of a human operator.

In the early 2000s, several laboratories around the world began to compete in developing brain-computer interfaces. In particular, researchers implanted multi-electrode arrays into the motor cortex of the human brain, which allowed operators to control a robotic manipulator. To date, the most advanced results have been achieved in controlling an anthropomorphic robotic arm.

The electrical activity of the brain can be read with invasive or non-invasive sensors. Invasive sensors are surgically implanted into the cerebral cortex. Such sensors read information from the surrounding neurons and provide a more accurate and cleaner (interference-free) signal.

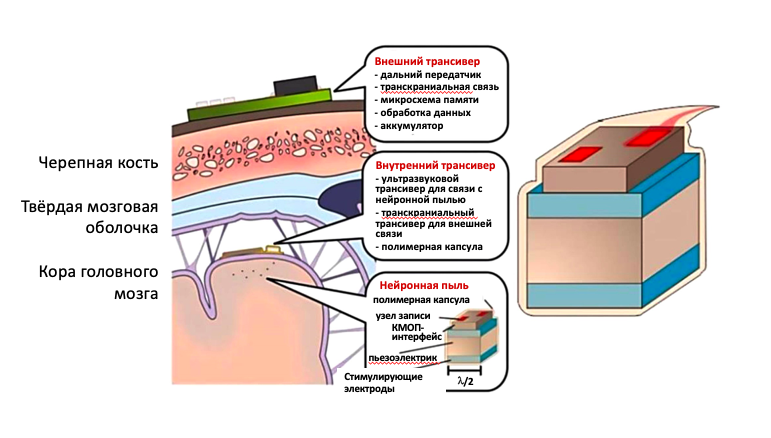

An example of an invasive neurointerface is the technology of the startup Neuralink (Figure 4), presented by E. Musk in July 2019. A related concept of a wireless neurointerface uses so-called neural dust. Neural dust particles are silicon sensors about 100 microns in size that must be introduced directly into the cerebral cortex. Nearby, above the dura mater, a 3-millimeter device is placed that interacts with the neural dust by means of ultrasound.

Fig. 4. Brain-computer interface of the type of "neural dust" ("neural lace")

A non-invasive interface is much safer from a medical point of view. In a particular case, it may be a helmet or cap worn on the head and fitted with electrodes for recording the electroencephalogram (EEG electrodes). The accuracy of such a device is somewhat lower than that of invasive sensors [20, 21].

Depending on how brain activity is evoked, the method can be independent (endogenous activation: imagining a movement) or dependent (exogenous activation: demonstration of a movement on a screen). In the first case, slow cortical potentials and the mu (8-12 Hz), beta (18-30 Hz), and gamma (30-70 Hz) rhythms are used for control. In the second case, focusing on an external visual stimulus produces a pronounced response in the visual cortex compared with the response to an unattended stimulus, and the operator's intentions are decoded from previously recorded differences between the responses to attended and ignored stimuli.
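The rhythm-based approach relies on estimating how much signal power falls into each frequency band. The sketch below does this with a plain discrete Fourier transform over a synthetic one-second signal; the band edges are those quoted above, while the function names, the sampling rate, and the test signal are assumptions made for illustration. Real EEG processing involves filtering, artifact rejection, and per-operator calibration that are omitted here.

```python
import math

# Frequency bands quoted in the text, in Hz.
BANDS = {"mu": (8.0, 12.0), "beta": (18.0, 30.0), "gamma": (30.0, 70.0)}


def band_power(samples: list[float], fs: float, f_lo: float, f_hi: float) -> float:
    """Mean normalized DFT power over the bins whose frequency lies in [f_lo, f_hi)."""
    n = len(samples)
    total, count = 0.0, 0
    for k in range(1, n // 2):
        freq = k * fs / n
        if f_lo <= freq < f_hi:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            total += (re * re + im * im) / (n * n)
            count += 1
    return total / count if count else 0.0


def dominant_band(samples: list[float], fs: float) -> tuple[str, dict[str, float]]:
    """Return the name of the strongest band and the power of every band."""
    powers = {name: band_power(samples, fs, lo, hi) for name, (lo, hi) in BANDS.items()}
    return max(powers, key=powers.get), powers


# Synthetic signal: a strong 10 Hz (mu) component plus a weak 22 Hz (beta) one.
fs = 256
signal = [math.sin(2 * math.pi * 10 * i / fs) + 0.2 * math.sin(2 * math.pi * 22 * i / fs)
          for i in range(fs)]
name, powers = dominant_band(signal, fs)
print(name)  # prints mu
```

A control scheme of this kind would typically watch for changes in band power over time (for example, a drop in mu power when the operator imagines a movement) rather than for the dominant band alone.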

In addition to EEG, magnetoencephalography (MEG), which uses superconducting quantum interference magnetometers (SQUIDs), can be used to create a non-invasive neurointerface. MEG provides better temporal and spatial resolution than EEG.

Other non-invasive methods of recording brain activity (tracking the concentrations of oxyhemoglobin and deoxyhemoglobin in the cerebral bloodstream by near-infrared spectroscopy (NIRS), functional magnetic resonance imaging, etc.) have worse signal latency, require more complex hardware, and so on.

3. Protecting the human operator from extreme sensory signals from the robot avatar

When information is transmitted to the operator as realistically as possible, an extreme situation affecting the robot avatar (collision with an object, a fall from a height, a strong mechanical impact, high pressure, bright flashes, intolerable sounds, and so on) creates a risk of harm to the health of the person connected to the device. Therefore, all coupling systems should be configured for a defined operating range and should disconnect synchronization instantly when such a danger arises and acceptable thresholds are exceeded.
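The protection logic described above can be sketched as a guard placed between the robot's sensors and the operator's suit: moderate overshoots are clamped to a safe range, and a reading far above the limit breaks synchronization. The channel names, the limit values, and the trip factor are hypothetical placeholders, not medically validated thresholds.

```python
from dataclasses import dataclass

# Hypothetical per-channel limits on what may be replayed to the operator.
SAFE_LIMITS = {"force_n": 50.0, "sound_db": 85.0, "luminance_cd_m2": 500.0}
# Hypothetical factor: a raw reading this many times above its limit trips the guard.
TRIP_FACTOR = 3.0


@dataclass
class FeedbackGuard:
    connected: bool = True

    def filter(self, raw: dict[str, float]) -> dict[str, float]:
        """Return the feedback that is safe to replay, or {} once synchronization is cut."""
        if not self.connected:
            return {}
        safe = {}
        for channel, value in raw.items():
            limit = SAFE_LIMITS[channel]
            if value > TRIP_FACTOR * limit:
                # Extreme event on the robot side: disconnect immediately.
                self.connected = False
                return {}
            safe[channel] = min(value, limit)
        return safe


guard = FeedbackGuard()
print(guard.filter({"force_n": 20.0, "sound_db": 100.0}))  # sound is clamped to its limit
print(guard.filter({"force_n": 500.0}))                    # guard trips, nothing is replayed
print(guard.connected)
```

After the guard trips, reconnection would presumably require an explicit decision by the operator or a supervisor rather than happening automatically.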

There are also other dangers associated with controlling an avatar robot, caused mainly by the negative side effects of virtual reality on the human body. These effects include general discomfort, headache, dizziness, and disruption of the cardiovascular and central nervous systems.

In this regard, it will be necessary to develop a system of medical and psychological prevention for avatar operators.

Conclusion

As knowledge about the effects of space factors on the human body grows, the need to develop robot avatar technology will become increasingly apparent.

Despite loud statements about imminent regular flights to the Moon and about the future colonization of Mars and other planets, reality presents a completely different picture.

In our opinion, confident progress at this historic stage in the exploration and use of space is possible only with robot avatars as a means of human telepresence in space.

Avatars could be given the following functions:

- Extravehicular activity in outer space;

- Visual and instrument-based studies of celestial bodies of the solar system, including in extreme conditions (increased gravity, aggressive environment, high temperature and pressure, etc.);

- Construction and installation work in space, including the assembly of large structures;

- Maintenance of production modules;

- Rescue operations in space and on the surface of celestial bodies, etc.

The avatar robot could be controlled from an orbital (space) station, from a planetary base well protected from the adverse factors of outer space, or even from the Earth.

References

1. Boyko A. Teleprisutstvie. Roboty teleprisutstviya. RoboTrends. Available at: http://robotrends.ru/robopedia/teleprisutstvie.-roboty-teleprisutstviya (Retrieval date: 25.02.2020).

2. The underwater anthropomorphous robot - avatar, or why the underwater robot must have legs. Available at: http://streltsovaleks.narod.ru (Retrieval date: 02.02.2020).

3. Bernshteyn N.A. Fiziologiya dvizheniy i aktivnost'. Ed. O. G. Gazenko. Moscow, Nauka, 1990. 494 p.

4. Robots. Your Guide to the World of Robotics. Robots. Available at: https://robots.ieee.org/robots/ (Retrieval date: 25.01.2020).

5. Robot Fedor: osobennosti, kharakteristiki i naznachenie. Robo Sapiens. Portal o robototekhnike. 2017. Nov 08. Available at: https://robo-sapiens.ru/stati/robot-fedor/ (Retrieval date: 25.01.2020).

6. Boyko A. Avatary. RoboTrends. Available at: http://robotrends.ru/robopedia/avatary (Retrieval date: 01.02.2020).

7. Sozdanie robota-avatara: chto uzhe izvestno o konkurse XPRIZE. Robo Hunter. Novosti. 2018. Sept 14. Available at: https://robo-hunter.com/news/sozdanie-robota-avatara-chto-uje-izvestno-o-konkurse-xprize13243 (Retrieval date: 02.02.2020).

8. HP Reverb – obzor novogo VR seta. Khabr. 2019. May 18. Available at: https://habr.com/ru/post/452414/ (Retrieval date: 08.02.2020).

9. Toyota predstavila novogo gumanoidnogo robota T-HR3 seta. Khabr. 2017. Nov 26. Available at: https://habr.com/ru/post/408413/ (Retrieval date: 08.02.2020).

10. ExoHand. New areas for action for man and machine. Festo. Available at: https://www.festo.com/group/en/cms/10233.htm (Retrieval date: 08.02.2020).

11. Ochki virtual'noy real'nosti VR Shinecon. Internet-magazin Ozon. Available at: https://www.ozon.ru/context/detail/id/167297611/?utm_source=yandex_direct&utm_medium=cpc&utm_campaign=product_15500_mspt_dsa_all_tech_Igroviepristavki_normal_46099723&utm_term=_cbrx_2121356 (Retrieval date: 08.02.2020).

12. The method of Streltsov's of compulsory angular orientation of a head and pelvis of the person-operator in the support mechanism, which we uses for remote control a humanoid robot avatar. Available at: http://streltsovaleks.narod.ru/HeadAndPelvis.html (Retrieval date: 09.02.2020).

13. Tekhnologiya Intel® RealSense™. Intel. Available at: http://www.intel.ru/content/www/ru/ru/architecture-and-technology/realsense-overview.html (Retrieval date: 01.03.2020).

14. Patton J., Mussa-Ivaldi F. Robot-assisted adaptive training: custom force fields for teaching movement patterns. IEEE Transactions on Biomedical Engineering, 2004, vol. 51, no. 4, pp. 636 – 646.

15. Levitskaya O. S., Lebedev M. A. Interfeys mozg – komp'yuter: budushchee v nastoyashchem. Vestnik RGMU, 2016, no. 2, pp. 4 – 16.

16. Fetz E. E. Operant conditioning of cortical unit activity. Science, 1969, Feb 28, vol. 163, iss. 3870, pp. 955 – 958.

17. Hochberg L.R., Bacher D., Jarosiewicz B., Masse N.Y., Simeral J.D., Vogel J. et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature, 2012, May 16, no. 485, pp. 372 – 375. DOI: https://doi.org/10.1038/nature11076

18. Collinger J.L., Wodlinger B., Downey J.E., Wang W., Tyler-Kabara E.C., Weber D.J. et al. High-performance neuroprosthetic control by an individual with tetraplegia. Lancet, 2013, Feb 16, vol. 381, iss. 9886, pp. 557 – 564. DOI: 10.1016/S0140-6736(12)61816-9

19. An integrated brain-machine interface platform with thousands of channels. Available at: https://www.documentcloud.org/documents/6204648-Neuralink-White-Paper.html (Retrieval date: 01.03.2020).

20. Klopot S. Neyrointerfeys «Rostekha» postupit v prodazhu v 2019 godu. 365-invest. Available at: https://365-invest.com/neyrointerfeys-rosteha-postupit-v-prodazhu-v-2019-godu/ (Retrieval date: 01.03.2020).

21. Golovanov G. Sozdan pervyy effektivnyy neinvazivnyy interfeys. Khaytek+. 2019. Jun 20. Available at: https://hightech.plus/2019/06/20/sozdan-pervii-effektivnii-neinvazivnii-neirointerfeis (Retrieval date: 01.03.2020).

22. McFarland D.J., Krusienski D.J., Wolpaw J.R. Brain-computer interface signal processing at the Wadsworth Center: mu and sensorimotor beta rhythms. Progress in Brain Research, 2006, vol. 159, pp. 411 – 419. DOI: 10.1016/S0079-6123(06)59026-0

23. Mellinger J., Schalk G., Braun Ch., Preissl H., Rosenstiel W., Birbaumer N., Kübler A. An MEG-based brain-computer interface (BCI). Neuroimage, 2007, Jul 1, vol. 36, no. 3, pp. 581 – 593. DOI: 10.1201/b11821-21

24. Kim H.K., Park J., Choi Y., Choe M. Virtual reality sickness questionnaire (VRSQ): Motion sickness measurement index in a virtual reality environment. Applied Ergonomics, 2018, vol. 69, pp. 66 – 73.

© V.Y. Klyushnikov, S.A. Rodkina, 2020

Avatar (Skt. अवतार, avatāra - "descent") is a term in the philosophy of Hinduism, commonly used to refer to the descent of a deity on earth, its embodiment in human form.